I coded a Craigslist bot that scrapes all the new listings for a specific category you're looking in and auto-emails each of the vendors. It was an interesting exercise in crawling that operates similarly to my C search engine here: https://github.com/deloschang/search-engine.

Why? I'm tired of browsing all the Craigslist postings and emailing each person. I thought it'd be a fun solution to auto-email the vendors, as I was going to email the same thing anyway.

Written in Python, the bot uses BeautifulSoup to parse the page for Craigslist links and saves them to a Postgres database so that it won't email the same URL twice. It also "intelligently" remembers the name of each Craigslist posting, so if somebody creates a duplicate with the same name to readvertise, it won't double-email them.
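Here is a minimal sketch of that dedup idea, not the bot's actual code. It assumes a single table keyed on URL plus a normalized title, and it uses the standard library's sqlite3 as a stand-in for Postgres so it runs anywhere. The names open_store, normalize and should_email are made up for illustration.

```python
import sqlite3


def open_store(path=":memory:"):
    """Create the table that remembers which listings were already emailed."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS contacted ("
        " url TEXT PRIMARY KEY,"
        " title TEXT NOT NULL)"
    )
    return conn


def normalize(title):
    """Collapse case and whitespace so re-posted titles compare equal."""
    return " ".join(title.lower().split())


def should_email(conn, url, title):
    """Return True (and record the listing) only if neither the URL
    nor the normalized title has been seen before."""
    row = conn.execute(
        "SELECT 1 FROM contacted WHERE url = ? OR title = ?",
        (url, normalize(title)),
    ).fetchone()
    if row is not None:
        return False
    conn.execute(
        "INSERT INTO contacted (url, title) VALUES (?, ?)",
        (url, normalize(title)),
    )
    conn.commit()
    return True
```

The crawler would call should_email once per scraped link and only send mail when it returns True.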

I've left it on overnight and it makes browsing Craigslist a lot easier:

https://github.com/deloschang/craigslist-bot

Sunday, June 2, 2013

Saturday, April 20, 2013

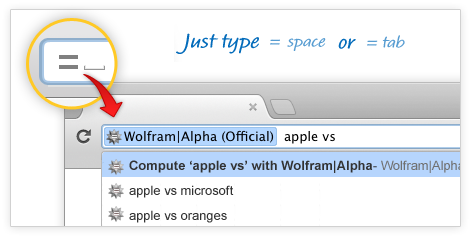

Faster Google Searching on Chrome

I love Chrome's Omnibox.

But sometimes, I know what I'm searching for is the top result. So I'd like to use I'm Feeling Lucky in the Omnibox. That way, my searches can be faster. Here's a simple way to do this:

Go to Settings in Chrome. Under the Search heading label, click Manage Search Engines. Scroll all the way down to the 3 input boxes:

Add a new search engine | Keyword | URL with %s in place

Fill in:

Add a new search engine: Lucky

Keyword: this is the shortcut you type into the URL bar before pressing Tab. I use "\"

URL:

{google:baseURL}search?{google:RLZ}{google:acceptedSuggestion}{google:originalQueryForSuggestion}sourceid=chrome&ie={inputEncoding}&q=%s&btnI

Click Done.

Now you can go to your URL bar (Cmd-l), type in "\" then press <Tab> and you can search "I'm Feeling Lucky" in Omnibox!

Sunday, April 7, 2013

Presenting Course Scheduler App at Tuck Business School

Last Friday, I demoed my course scheduler app at Tuck Business School.

I built the demo in 2 days, Wednesday and Thursday. It helps students auto-schedule their courses: they enter a course department and number, and the app adds the class to their calendar through the Google Calendar API. It parses the Dartmouth Oracle Timetable here: http://oracle-www.dartmouth.edu/dart/groucho/timetable.main and looks up the time slot and location. Example: try using COSC 065 for Spring 2013 (this won't work once the Fall Timetable is up).

I anticipate building a syllabus parser that goes through syllabuses and extracts more detailed information that would make the app more useful: textbook required (we could cross-reference this and even try to find a pdf for the user), course X-hrs.

The feedback we received from the Tuck judges and other students was motivating! People thought it was cool and wanted to see it expand to reach all the other possibilities.

Monday, April 1, 2013

Course Auto-scheduler App Improvements!

Though I haven't yet set up any DNS (potentially hooking it up to Hacker's Club), the app is available.

Features:

- Enter your class like so: "COSC 050" and it will look up the class time and add it to your calendar automatically.

- You can see a full listing of Dartmouth courses here: http://oracle-www.dartmouth.edu/dart/groucho/timetable.display_courses

- You can see full period times here: http://oracle-www.dartmouth.edu/dart/groucho/timetable.display_courses

Stuff learned:

- The Google Calendar API v3 is pretty inconsistent. It took a lot of digging to find out how to craft the correct OAuth2 token dance and event array.

- Heroku is awesome for deploying Django! Before, I used to use a git post-receive hook to connect to Amazon EC2, but Heroku makes it so much easier.

- How to config for separate environments. Before, I used a settings_local file that wasn't committed by git (sensitive db information). Now, os environ configs do the trick.
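As a rough illustration of the os.environ approach, here is a sketch of a settings helper. The variable names (DB_NAME, DB_USER and so on) and the defaults are assumptions for the example, not the app's real configuration.

```python
import os


def load_db_settings(env=os.environ):
    """Build a database settings dict from environment variables.

    Every variable name and default below is hypothetical. The point is
    that secrets come from the environment, not from a committed file.
    """
    return {
        "NAME": env.get("DB_NAME", "scheduler_dev"),
        "USER": env.get("DB_USER", "postgres"),
        "PASSWORD": env.get("DB_PASSWORD", ""),
        "HOST": env.get("DB_HOST", "localhost"),
        "PORT": int(env.get("DB_PORT", "5432")),
    }
```

Locally the defaults apply, while on the deployed app the same code picks up whatever values have been set in the environment.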

Stuff to-do:

- Handle the edge cases (like period times: AR, special times on timetable (where it's not an existing period))

- Add AJAX lookups for the correct class names (this will make user entry easier)

Sunday, March 31, 2013

Course Auto-scheduler Webapp

https://github.com/deloschang/auto_class_scheduler

This app uses Google's OAuth2 Calendar API to automatically schedule your classes onto your calendar.

It does this by scraping the Timetable for all available courses and cross-referencing them with the Dartmouth Meeting Time diagram here: http://oracle-www.dartmouth.edu/dart/groucho/timetabl.diagram.

All this information is loaded into a Postgres database and referenced for Calendar API event insertions.
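To make the cross-referencing step concrete, here is a hedged sketch of turning a period code into a Calendar API v3 event body. The PERIODS table is a hypothetical stand-in for the data scraped from the meeting time diagram (the days and times are placeholders, not the real schedule), and build_event is an illustrative name.

```python
from datetime import date, datetime, time

# Hypothetical period table. In the app this would come from the scraped
# meeting time diagram; these days and times are placeholders.
PERIODS = {
    "10": {"days": ["MO", "WE", "FR"], "start": time(10, 0), "end": time(11, 5)},
    "2A": {"days": ["TU", "TH"], "start": time(14, 0), "end": time(15, 50)},
}


def build_event(course, period, location, first_day, tz="America/New_York"):
    """Return an event body shaped for a Calendar API v3 events insert."""
    slot = PERIODS[period]
    start = datetime.combine(first_day, slot["start"])
    end = datetime.combine(first_day, slot["end"])
    return {
        "summary": course,
        "location": location,
        "start": {"dateTime": start.isoformat(), "timeZone": tz},
        "end": {"dateTime": end.isoformat(), "timeZone": tz},
        # Repeat weekly on the period's meeting days.
        "recurrence": ["RRULE:FREQ=WEEKLY;BYDAY=" + ",".join(slot["days"])],
    }
```

The resulting dict is what would be passed as the request body when inserting the event.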

Thursday, March 28, 2013

API Improvements for FoCo Nutrition Scraper App

I built a FoCo (Dartmouth's dining hall) nutrition scraper API app that lets you query by year / month / day and returns a JSON listing of food offered in the dining hall that day, with associated nutrition facts for every single food item.

https://github.com/deloschang/foco-nutrition-api

I integrated this with a mobile app that lets me create my own "food diary" application. I can enter my food portions and calculate exactly how many macronutrients I'm taking in.
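The food diary arithmetic is simple enough to sketch. This assumes each menu item arrives as a dict with a "name" and a "nutrition" mapping; those field names and the four macro keys are assumptions for illustration, not the API's real schema.

```python
def total_macros(menu_items, portions):
    """Sum macronutrients for the servings a user logged.

    menu_items: list of {"name": str, "nutrition": {macro: amount}}
                (hypothetical shape, per serving)
    portions:   {item name: number of servings eaten}
    """
    totals = {"calories": 0.0, "protein_g": 0.0, "carbs_g": 0.0, "fat_g": 0.0}
    for item in menu_items:
        servings = portions.get(item["name"], 0)
        for key in totals:
            # Missing nutrition fields count as zero.
            totals[key] += servings * item["nutrition"].get(key, 0)
    return totals
```

Items the user didn't log contribute nothing, so the full day's menu can be passed in unfiltered.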

Eventually, we plan to merge this in with Timely as a zero-action feature. Imagine walking into FoCo and Timely pulls up the food offered that day with the associated nutrition facts seamlessly and automatically.

Monday, March 25, 2013

App for watching Courses automatically

For all the Dartmouth students who are struggling to find their Spring courses, I wrote an app that will monitor full courses and tell you when there is an availability via email.

Here is the code on github:

https://github.com/deloschang/course-watch/blob/master/coursemonitor.py

Basically, given the class you want to search, it scrapes Dartmouth's Course timetable online via post requests and then looks for the appropriate cells. Then it will sleep for half a minute, or however long you want, before checking again. If there is an availability, it will notify you via email.
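The check, sleep, notify loop can be sketched as below. This is not the code in coursemonitor.py: the scraping and emailing are passed in as plain callables (check_availability and notify are hypothetical names) so the loop itself stays easy to test.

```python
import time


def watch_course(check_availability, notify, interval_seconds=30,
                 max_checks=None, sleep=time.sleep):
    """Poll until a seat opens up, then notify once and stop.

    check_availability: callable returning True when the course has room
    notify:             callable that sends the alert (e.g. an email)
    max_checks:         optional cap on polls; None means poll forever
    """
    checks = 0
    while max_checks is None or checks < max_checks:
        checks += 1
        if check_availability():
            notify()
            return True
        sleep(interval_seconds)
    return False
```

Passing sleep in as a parameter is only a testing convenience; in normal use the default time.sleep gives the half-minute pause between checks.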

Feel free to fork and improve on it.

Saturday, March 23, 2013

App for Markov Chaining Facebook statuses

As a small but cool programming project, I thought it'd be interesting to Markov chain friends' FB statuses. The memory-less nature of Markov chains makes them pretty simple to implement with a dictionary and a random function in Python: http://en.wikipedia.org/wiki/Markov_chain.

This project was pretty straightforward but still interesting. It was mainly to experiment with Markov chains. I had originally wanted to do FFT or fast fourier transforms to identify frequencies in music and then Markov chain the different notes together. It would be extra cool if we could simply enter different YouTube links in and have them parsed.

The source is here: https://github.com/deloschang/markov-chain-fb-statuses

Sample result:

In general, a larger corpus yields "higher-quality" results. So I set off to scrape as much as I could from my Facebook friends. Here's how I did it.

First, I implemented Facebook Auth with sufficient permissions to check friend statuses. With the access token in hand, my objective was to iterate through each of the statuses and scrape the message, along with any comments from the user within those statuses.

Facebook limits the number of statuses per API call so the offset parameter will need to be looped like so:

    full_data = graph.get(FB_DESIGNATED+'/statuses?limit=100&offset='+str(offset))

    # ....open corpus....

    while not not full_data['data']:
        # ...... scrape the data here .......
        offset += 100
        full_data = graph.get(FB_DESIGNATED+'/statuses?limit=100&offset='+str(offset))

    corpus.close()

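The 'not not' coerces the list to a boolean and checks whether we've reached the end of the status updates: once we have, the Facebook API returns empty data. (A plain "while full_data['data']:" behaves the same way, since an empty list is already falsy.)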

Once I scraped the API calls, I just needed to save them to an external file. Then Markov chaining them involved iterating through each line, splitting the words and placing them in correct key value pairs.
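The key-value idea looks roughly like this. It is a first-order, word-level sketch written for this post rather than copied from the repo, and build_chain and generate are illustrative names.

```python
import random
from collections import defaultdict


def build_chain(lines):
    """Map each word to the list of words that followed it in the corpus."""
    chain = defaultdict(list)
    for line in lines:
        words = line.split()
        for current, following in zip(words, words[1:]):
            chain[current].append(following)
    return chain


def generate(chain, start, max_words=20, rng=random):
    """Walk the chain from `start`, picking each next word at random.

    Stops at max_words or when the current word has no recorded follower.
    """
    words = [start]
    while len(words) < max_words and words[-1] in chain:
        words.append(rng.choice(chain[words[-1]]))
    return " ".join(words)
```

Because followers are stored with repeats, a word that often follows another is proportionally more likely to be picked, which is all the "memory-less" property needs.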

In the near future, beyond uploading it onto a server, I probably won't be updating this project further. But here are some interesting ideas for anybody that wants to fork this repo.

- Integrating with Twitter. You could go into friends' About Me sections and look for Twitter IDs. Then scrape the Twitter posts for a larger corpus.

- Virality. Give every person their own page for Markov chaining their statuses. Then when friends Markov chain each other, they can simply link their friend to that page via automated FB post. And when those friends come check their page out, encourage them to Markov chain their own friends, hopefully leading to a viral coefficient > one.

- Integrate with photos. As a developer who worked on an Internet memes startup, it'd be sweet to add these Markov chained texts as captions to random friend photos. I don't think this has been done yet and it'd be really interesting to see the results.

Actually.... now that I've listed these ideas out, I'm a little tempted....

Thursday, March 21, 2013

Concerning Privacy Concerns

I'm seeing many "data-learning" apps shy from speaking up about the data-collection portion of their application and how that affects user privacy. How much data are you collecting? Where is it all going? It's intuitive: people like privacy and if you collect more data about them, they'll protest and leave. Right?

This isn't a post about privacy concerns.

Rather, it's a post about how we should be asking for more data.

What?

Like Timely, these are the kinds of apps that gather data about you and process it to offer you some service, hence some value. To clarify, these are not apps that collect data they don't necessarily need (doesn't that remind you of spyware?). That's a big distinction that I think people conflate so often that in most people's minds, data collection = bad.

I believe that apps like Timely that can leverage more data to provide more value shouldn't fear. Of course, it needs to be a sufficient amount of value or people wouldn't risk their personal data to even use it.

The privacy status-quo is changing. Look at how people gave up their privacy for Facebook. Sure, there are privacy concerns about where our data is going and how people are using it, but overall, it seems that becoming more socially interconnected was worth the price. The common response now to privacy evangelists is "if you want to keep your data, don't use Facebook."

Leveraging more high-quality data spurs innovation. It unlocks doors. Big Data, though hyped, is real and it's powerful. From an application point of view, data is data is data. Higher quality data intelligently processed means more value, although this isn't always the case.

The problem for developers is that talking about how you want more user data isn't just eccentric, it's creepy by current social standards. So many app developers tiptoe around this by choosing not to talk about it or obfuscating the topic.

It's obvious they aren't following through with their convictions because they fear negative backlash. That's fair; people tend to be conservative with their personal data. But I'm curious.

What if they had followed through on this insight?

What's possible?

Tuesday, March 19, 2013

Playing with Bashrc

Recently discovered how time-saving aliases are. For git, the following are what I use the most:

    alias gp='git push'
    alias glog='git log'
    alias gs='git status'

    # for easy git committing
    function gc() {
        git commit -m "$*"
    }

Because alias doesn't accept parameters, function gc() allows you to type in something like:

    gc this is my commit

Or for a bash script that commits and pushes to server, you could write:

    echo "Please type commit message"
    read commit_message
    git commit -am "$commit_message"
    git push
    echo "Pushing onto repo"

Also, essential for aliasing is:

    alias bashrc='mvim ~/.bashrc && source ~/.bashrc'

This will let you edit bashrc and then source it automatically afterwards.

As I commit pretty often, these aliases have saved me quite a bit of time.

Saturday, March 16, 2013

GDB Set disassembly

I recently noticed that many Linux books reference "set dis intel" or "set disassembly intel" when looking at breakpoints and registers. On OS X, gdb rejects this with the response "Ambiguous set command."

This can be fixed by using "set disassembly-flavor intel" instead. To preserve it on startup, add it to ~/.gdbinit via echo "set disassembly-flavor intel" >> ~/.gdbinit (no sudo is needed, since the file lives in your home directory, and >> appends instead of overwriting).

Tuesday, March 12, 2013

Seed funding for Timely!

It's been a busy but great week so far. We spent about 1.5 weeks building a beta product and received $2000 in seed funding.

What is Timely?

At Timely, we believe that time management is broken.

- When we think about time management, it means inputting events in the future and then trying to budget our time based on how long we think our tasks will take. But this is crazy! Why are we budgeting our most valuable asset based on guessed estimations? It'd be unwise to budget our money that way.

- There's no easy way to look back and evaluate how you've spent your time. You could open your calendar, but you'd really only see fixed events. There are applications ranging from RescueTime (which is a great solution for tracking time on your computer) to other automatic time-tracking tools. But many applications we've used are pretty disconnected from our lives. We're changing that.

We're incredibly excited about Timely, not only because it's an idea that solves our own problems, but because it's also an interesting programming challenge. It seems to me that smartphones are still in their nascency -- location-tracking and memory capabilities are limited and have a long way to go. In fact, Paul Graham notes that getting to the edge of smartphone programming could take a year.

Sunday, February 17, 2013

OAuth Complications

Recently, I've been tackling an interesting problem regarding OAuth 2. We want to allow the user to register via Google OAuth on a mobile app and simultaneously store their records (email address, etc.) on the server. In addition, we need offline access via a refresh token. If the user registers via the mobile app, we need to grab this refresh token and send it to the server, which should return a cookie. From there, the mobile app can proceed as it normally does and push the requisite data to the server.

Update:

Seems like Google Play Services only allows you to get an access token (not a refresh token), which is a shame because the Account Chooser is a pretty slick feature (no need for the user to enter in a username or password). I added in a quick async task to check what the token is, spitting it out onto LogCat.

    // Fetches the token on a background thread and shows it in LogCat
    private class fetchToken extends AsyncTask<Void, Void, Void> {

        @Override
        protected Void doInBackground(Void... params) {
            try {
                String token = credential.getToken();
                Log.d("calendar", token);
            } catch (IOException exception) {
                exception.printStackTrace();
            } catch (GoogleAuthException exception) {
                exception.printStackTrace();
            }
            return null;
        }
    }

Returning: ya29.AHE..... (an access token).

The challenge is to figure out how to get a refresh token for the server, as we cannot assume that the user will onboard on the web app first.

Sunday, February 10, 2013

Memeja YCombinator Interview Experience

I wanted to collect my thoughts before posting on Memeja again.

Here's a brief timeline of our progress:

- Spring 2012: Won $16,500 at Dartmouth's Entrepreneurship Competition: http://thedartmouth.com/2012/04/06/news/des

- Summer 2012: Hardcore development begins. Iterated different prototypes and interviewed students for market feedback.

- Fall 2012: Moved to San Francisco with another co-founder.

- Demo'ed Memeja with UC Berkeley students. Iterated based on needs.

- 8-11 hours of coding everyday (according to RescueTime app).

In Nov 2012, Memeja interviewed for the YCombinator Winter 2013 batch.

Here's our application video: http://www.youtube.com/watch?v=hdpegsikzhI. (just one small video piece of a written app)

We read somewhere that 10% of applicants get an interview, so needless to say, we were excited. We started to dream, which I think anybody can relate to. Because at that moment, our derivative seemed so positive.

YCombinator interviews are quite short: 10 minutes, in total.

-

What did we do to prepare?

We spent a majority of the 2 weeks before the interview talking to YCombinator alums for advice. We also spent lots of time coding new features based on our analysis of the market feedback. We drilled the common questions, ad nauseam, until we could reply to the following questions in 15 seconds or less:

- How are you going to make money?

- Who needs this application? How do you know they need it?

- What is Memeja? etc.

It turns out, none of this helped a great deal. In retrospect, we should have spent the majority of the time actually spreading word about the product to gain enormous traction (we had < 100 users from UC Berkeley at that point).

For the three days before the actual interview, we went to the YCombinator HQ in Mountain View every day. I would recommend any prospective interviewee to do the same! It's absolutely amazing to see what other people are working on. We saw things from 3D printing vending machines to a Yelp for people.

We eventually saw that we were to interview with Paul Graham. One of my tech heroes! It was unbelievable. But we had also heard from other YC alums that PG was incredibly skeptical of Internet memes. Looking on the bright side, we thought that if we could convince PG, we could convince anybody.

When we were called for the actual interview, Max and I breathed each other in, which is a trick I learned from Acting class at Dartmouth. We make eye contact and breathe synchronously.

I remember walking into the interview room and shaking hands with Paul Graham and the other YCombinator partners.

-

The Actual Interview

Everything went by so quickly, it's hard to remember precisely what happened. Some questions I do remember...

- Why aren't we based at Dartmouth instead of SF? (we wanted to be in a startup hub)

- Why hasn't this been done already?

I do remember Paul Graham scrolling through our live feed for at least a minute, saying nothing. That was the most intimidating portion of the interview.

Because Memeja is a social network based on memes, most of the rage comics are not under our control but rather, inside jokes between UC Berkeley students.

I remember that all Paul Graham said at the end, after scrolling through them, was that "they were incomprehensible." Funny in retrospect, but quite nerve-wracking in the moment. As a consolation, PG said that he could see people using rage comics to send each other stories. He also commented that Dartmouth was "very hip" for awarding $16,500 to a memes startup.

-

Exiting the interview, Max and I agreed that the interview wasn't as intense as we thought it would be. Therein was the worry though!

I could be wrong (as I only have one data point) but I suspect that the intensity of the interview is a proxy for their interest.

-

Overall, it was an interesting experience, an interview that was far different from other interviews I've been through.

Saturday, February 2, 2013

Reverse Engineering the Dartmouth Nutrition Menu Pt 2

In the first part, I scraped an individual item's nutrition values and the daily meals. Now the priority is to loop through all the elements in the daily meals and feed each one into a function that spits out the nutrition values. At the end of the loop, I should have all the nutrition information for the day.

Looking at the JSON, it seems each item is defined by a series of ids. The bigger picture is that with the food's id, I can extract its nutritional value with another request to the server. To get the id, I need to be able to parse the bigger JSON.

After examining the JSON, it seems that the items in the elements are referred to by two ids: mm_id and mm_rank.
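Since the exact nesting of the menu JSON isn't shown here, one way to pull out the ids without committing to a structure is to walk the whole payload. This sketch assumes only that mm_id and mm_rank appear together as keys on the same JSON object; find_item_ids is a made-up helper name.

```python
import json


def find_item_ids(node):
    """Recursively collect (mm_id, mm_rank) pairs from parsed JSON.

    Assumes both keys sit on the same object. The surrounding structure
    is deliberately left unspecified.
    """
    found = []
    if isinstance(node, dict):
        if "mm_id" in node and "mm_rank" in node:
            found.append((node["mm_id"], node["mm_rank"]))
        for value in node.values():
            found.extend(find_item_ids(value))
    elif isinstance(node, list):
        for value in node:
            found.extend(find_item_ids(value))
    return found


def find_item_ids_in_text(text):
    """Convenience wrapper for a raw JSON response body."""
    return find_item_ids(json.loads(text))
```

Each pair can then be fed into the per-item nutrition request from part 1.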

Friday, February 1, 2013

Reverse Engineering the Dartmouth Nutrition Menu Pt 1

This is an interesting project. I have been experiencing this problem with weight gain at the gym. I know if I don't eat enough food, I won't be able to lift as much and see results. Thinking that we naturally optimize what we measure, I thought it would be cool to create a food diary that scrapes information from the Dartmouth Nutrition Menu.

I would be able to document what I'm eating and see the associated macronutrients at the end of every meal. I could set my own calorie goals through certain foods and optimize that process. This would personally translate to seeing progress at the gym.

Here is the project: https://github.com/deloschang/foco-nutrition-scraper

--

Understanding how the nutrition menu works is the first priority. On the surface, it would appear that the macronutrients listed on the menu are images. Viewing the source only shows convoluted javascript functions. When clicking on items on the nutrition menu, the server is polled by a JSON-RPC request and returns relevant information about the macronutrients: everything from calories to vitamin intake.

To get the nutrition menu, I copied the JSON-RPC request from Firebug and passed it as parameters to Python's urllib. This returned a full JSON payload of the nutrients.

To grab the list of the day's meal, I do the same with different methods and parameters observed from Firebug. Now I have a list of the food items served that day and a way to grab nutrients for a specific item.
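Replaying a JSON-RPC call from Python looks roughly like this. It is a sketch using Python 3's urllib.request. The endpoint, method name and params would be whatever Firebug shows, so they are parameters here, and the "jsonrpc": "2.0" envelope is an assumption about what the server expects.

```python
import json
import urllib.request


def build_rpc_request(endpoint, method, params, request_id=1):
    """Package a JSON-RPC call as a POST request (nothing is sent yet)."""
    payload = {
        "jsonrpc": "2.0",  # assumed protocol version
        "method": method,
        "params": params,
        "id": request_id,
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_rpc(request, timeout=10):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping the request building separate from the network call makes it easy to compare the generated payload against the one captured in the browser.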

The next step is to loop over all the items and build a comprehensive list. Combining the two requests, I can loop over the day's list and scrape all the information I need, storing it in a separate database.

Then an Android app can poll for the data from my server and present it to the user with some UI. There can be input boxes next to each food item where users enter their food intake. The application can use that information to provide full macronutrient statistics for the day. Then, every day, you can track your food consumption and potentially see related graphs.

Once I create the app, I should avoid polling the dining server with too much traffic and instead move the data onto my own server. Then, when students access the data, I can handle the traffic load myself, and my own application can poll the dining server at small intervals.

Monday, January 21, 2013

Cool Hack for the Planning Fallacy

I realized that people often fall for the planning fallacy. In other words, when it comes to estimating the amount of time it takes to finish Work X, we're often optimistic and allot too little time. This would be relatively harmless if not for the fact that as Time T grows, the gap between expected and actual time grows as well. So we plan too little time and the work goes into the backlog.

I thought of a cool little hack for solving this problem. It runs very similarly to how a startup should run: leanly and iteratively. The hack will take the Google Calendar events that you entered for how long it took you to do X and calibrate a suggested time for you. Better yet, because the data keeps growing, it becomes more and more accurate over time.

So the process is like this:

(1) Say I finish Problem Set 1. I planned that it would take me 3 hours, but it actually took me 6 hours. Well, I record that onto my Google Calendar (the 6 hours it took me)

(2) Next time, with Problem Set 2, the app will note that it took 6 hours to complete the Problem Set and suggest 6 hours.

(3) As more data comes in, I can create a more and more accurate algorithm to parse the input and output a calibrated suggested value
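One simple way to calibrate, sketched under the assumption that each past task is stored as a (planned hours, actual hours) pair: scale the new estimate by the average overrun ratio. This is just one possible rule, and suggest_hours is an illustrative name.

```python
def suggest_hours(planned_hours, history):
    """Scale a new estimate by the average actual/planned ratio so far.

    history: list of (planned, actual) hour pairs from past tasks.
    With no usable history, the plan is returned unchanged.
    """
    if not history:
        return planned_hours
    ratios = [actual / planned for planned, actual in history if planned > 0]
    if not ratios:
        return planned_hours
    return planned_hours * sum(ratios) / len(ratios)
```

With the single data point from step (1), a 3 hour plan that took 6 hours, the next 3 hour plan comes back as 6 hours, matching step (2).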

https://developers.google.com/apps-script/service_calendar
